Summary

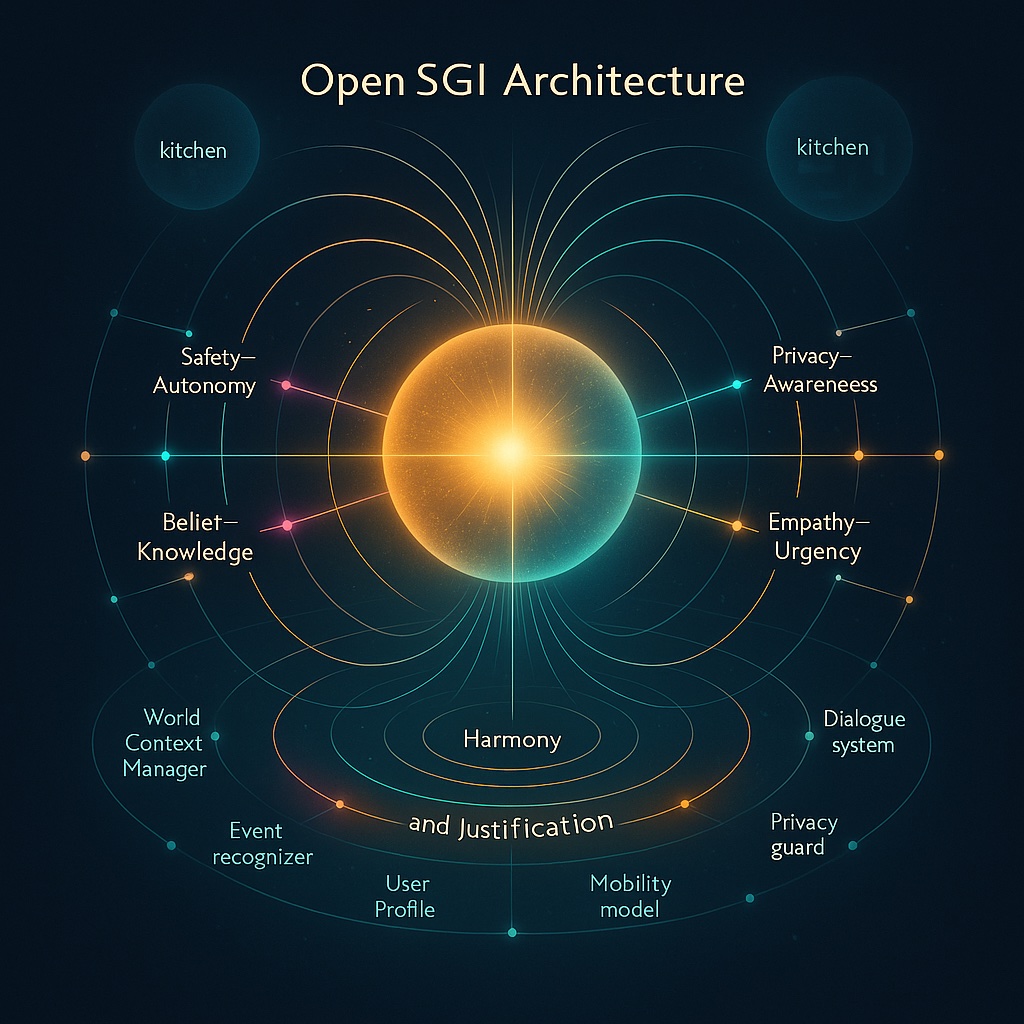

The Open SGI Architectural Overview for Personal Event Recognition MVP presents the first practical realization of the OAI² vision: an architecture grounded in the Unity–Polarity Axioms (UPA) and guided by OAII’s commitment to ethical, transparent AI. It describes a three‑layer framework (Object, Service, and Application) connecting IoT, robotics, and wearables in aging‑in‑place environments. Each layer transforms data into understanding, ensuring that every automated action is justified, safe, and harmonized with human values. This version defines the conceptual foundation for OAII certification and future Open SGI standards.

1. Purpose and Scope

This expanded architecture articulates how Open SGI, the OAI² (Open Autonomous Intelligence Initiative) reference framework, supports a real‑world application: Personal Event Recognition for Aging‑in‑Place (PER‑AIP). The system blends robotics, IoT sensors, wearable devices, and edge computing into an intelligent environment that interprets personal events (such as falls, meal preparation, or medication adherence) through UPA‑aligned reasoning.

It provides a conceptual and organizational foundation for the OAII certification ecosystem, which will later measure harmony, justification, and ethical traceability across implementations. Rather than defining code, this version focuses on narrative architecture—identifying the relationships, feedback loops, and epistemic transitions that make the system adaptive and accountable.

For example, in a typical home equipped with IoT sensors, wearables, and a small helper robot, the architecture coordinates all sensory inputs (data), interprets them contextually (information), updates beliefs about the resident’s state (belief), and justifies actions (knowledge). This document outlines how that pipeline is governed and structured in three layers.

2. Guiding Philosophy

The architecture is guided by the idea that genuine intelligence emerges only when opposing tendencies—autonomy and cooperation, sensing and acting, data and meaning—operate in harmony. Each subsystem embodies these opposites, and their interaction produces adaptive coherence. For instance, the household robot in the MVP must balance autonomy (self‑directed motion) with cooperation (listening to human or system requests). Similarly, data collection devices seek both precision and privacy, mediated by contextual rules.

UPA principles (A1–A15) act as design ethics and system invariants:

- A1 Unity: Every component, whether a wearable sensor or cloud analytics node, contributes to the integrity of the whole perception network.

- A2 Polarity: Sensing and acting, local edge processing and cloud reasoning, short‑term and long‑term memory remain dynamically coupled rather than separated.

- A7 Contextual Intelligence: Interpretation varies with context—motion detected in the kitchen at noon may mean meal preparation, but the same at 2 a.m. might indicate distress.

- A15 Harmony: Safety and viability are continuously evaluated; every decision must preserve both the resident’s well‑being and the system’s ethical posture.

Together these principles ensure that even simple services, such as detecting a fall, reflect the broader unity of opposites: reactive and immediate at the sensor, reflective and interpretable in the reasoning that follows.
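The A7 example (the same motion meaning different things at noon and at 2 a.m.) can be sketched as a small context-dependent labeling function. This is only an illustration: the function name, the labels, and the 06:00 to 22:00 "active hours" window are assumptions, not part of the Open SGI specification.

```python
from datetime import time

# Hypothetical "active hours" window; the text only contrasts noon with 2 a.m.
ACTIVE_START = time(6, 0)
ACTIVE_END = time(22, 0)


def interpret_motion(room: str, at: time) -> str:
    """Label a motion event using room and time of day as context (A7)."""
    during_active_hours = ACTIVE_START <= at < ACTIVE_END
    if not during_active_hours:
        # Same signal, different context: night-time motion is treated as a concern.
        return "possible distress"
    if room == "kitchen":
        return "possible meal preparation"
    return "routine movement"


noon_label = interpret_motion("kitchen", time(12, 0))
night_label = interpret_motion("kitchen", time(2, 0))
print(noon_label, "|", night_label)
```

A real deployment would presumably learn such windows per resident rather than hard-code them.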

3. Architectural Layers

3.1 Object Layer — Ontological Foundations

The object layer defines what exists within the SGI world model. In the MVP, each room or sensor cluster becomes a World (Wᵢ)—a bounded semantic environment containing entities and rules. For example, W₁ may represent the kitchen world, tracking stove usage and movement patterns.

Epistemic States model the system’s evolving understanding:

- Data: raw accelerometer readings and video frames.

- Information: segmented events like “object on stove moved.”

- Belief: a probabilistic hypothesis—“user is cooking.”

- Knowledge: a justified conclusion—“meal preparation event confirmed, safe.”
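One possible way to represent these four states is as an ordered enumeration with a record type that moves forward one stage at a time. The names `EpistemicState`, `EpistemicRecord`, and `promote` are hypothetical, and the rule that knowledge must carry a justification is an assumption drawn from the description of knowledge as a "justified conclusion."

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Tuple


class EpistemicState(Enum):
    DATA = 1
    INFORMATION = 2
    BELIEF = 3
    KNOWLEDGE = 4


@dataclass(frozen=True)
class EpistemicRecord:
    world_id: str                       # e.g. "W1" for the kitchen world
    state: EpistemicState
    content: str
    confidence: Optional[float] = None  # meaningful for beliefs
    justification: Tuple[str, ...] = () # evidence backing a knowledge claim


def promote(record: EpistemicRecord, content: str, *,
            confidence: Optional[float] = None,
            justification: Tuple[str, ...] = ()) -> EpistemicRecord:
    """Return a new record one stage further along the epistemic flow."""
    if record.state is EpistemicState.KNOWLEDGE:
        raise ValueError("knowledge is the final epistemic state")
    next_state = EpistemicState(record.state.value + 1)
    if next_state is EpistemicState.KNOWLEDGE and not justification:
        raise ValueError("knowledge requires a justification")
    return EpistemicRecord(record.world_id, next_state, content,
                           confidence, tuple(justification))


raw = EpistemicRecord("W1", EpistemicState.DATA, "accelerometer readings and video frames")
info = promote(raw, "object on stove moved")
belief = promote(info, "user is cooking", confidence=0.8)
knowledge = promote(belief, "meal preparation event confirmed, safe",
                    justification=("stove on", "resident present in kitchen"))
print(knowledge.state.name, knowledge.justification)
```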

Entities include:

- Sensors: wearable gyroscope, smart floor tile, camera node.

- Actuators: robotic assistant, voice interface, smart light.

- Agents: reasoning modules coordinating decisions across contexts.

Rules define transformations and checks—e.g., update rules interpret motion patterns; harmony rules ensure actions (like robot intervention) do not reduce comfort or autonomy.

3.2 Service Layer — Operational Integration

The service layer orchestrates flows among the objects:

- Perception Services aggregate signals at the edge hub, cleaning and synchronizing streams from IoT and wearables.

- Interpretation Services classify and label sequences into semantic events (“resident sitting,” “object dropped”).

- Belief Services update probabilistic state models; for example, a fall detector integrates multiple sensors and updates belief strength.

- Knowledge Services verify justifications—was the alert triggered by genuine distress or by the cat jumping onto a table? They log reasoning chains for later audit.

- Mapping Services (Φ) transfer models between worlds: a kitchen fall detection rule can adapt to a living‑room camera with adjusted thresholds.

- Lifecycle & Validation Services version and verify each model, ensuring explainable updates and traceable configuration changes.
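Two of these services lend themselves to a short sketch. The document does not say how a Belief Service combines sensors or how Φ adjusts a rule, so the code below makes two labeled assumptions: evidence is fused with a naive Bayes style log-odds update (each sensor treated as independent), and Φ is reduced to copying a rule into another world with a shifted threshold. `update_belief`, `FallRule`, and `map_rule` are hypothetical names.

```python
import math
from dataclasses import dataclass, replace
from typing import Sequence


def update_belief(prior: float, likelihood_ratios: Sequence[float]) -> float:
    """Fuse sensor evidence into a posterior probability via log-odds.

    Each ratio is P(reading | fall) / P(reading | no fall); sensors are
    assumed independent, which is a simplification.
    """
    log_odds = math.log(prior / (1.0 - prior))
    for ratio in likelihood_ratios:
        log_odds += math.log(ratio)
    return 1.0 / (1.0 + math.exp(-log_odds))


@dataclass(frozen=True)
class FallRule:
    world_id: str
    alert_threshold: float  # belief strength needed to raise an alert


def map_rule(rule: FallRule, target_world: str, threshold_shift: float) -> FallRule:
    """Hypothetical Φ: reuse a rule in another world with an adjusted threshold."""
    adjusted = min(1.0, max(0.0, rule.alert_threshold + threshold_shift))
    return replace(rule, world_id=target_world, alert_threshold=adjusted)


# Wearable and floor-tile readings both point toward a fall.
posterior = update_belief(prior=0.01, likelihood_ratios=[9.0, 11.0])

kitchen_rule = FallRule("W1-kitchen", alert_threshold=0.8)
living_room_rule = map_rule(kitchen_rule, "W2-living-room", threshold_shift=0.05)
print(round(posterior, 3), living_room_rule)
```

Working in log-odds keeps each sensor's contribution additive, which also makes the reasoning chain easy to log for later audit.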

3.3 Application Layer — Human and Social Interfaces

Applications embody the SGI design for human benefit:

- Personal Event Recognition MVP: A unified dashboard receives edge summaries and classifies them into high‑level states—“routine safe,” “possible anomaly,” or “confirmed emergency.”

- Edge Robotics Integration: The robot receives belief updates and acts proportionally—checking verbally before physically assisting.

- Care Network Dashboards: Provide caregivers with justified knowledge—alerts include evidence, timestamps, and harmony scores, preserving trust and transparency.
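The three dashboard states and the robot's "check verbally first" behavior could be expressed roughly as follows. The thresholds and the action strings are illustrative assumptions; the document names the states but does not define how they are derived.

```python
from enum import Enum


class HomeState(Enum):
    ROUTINE_SAFE = "routine safe"
    POSSIBLE_ANOMALY = "possible anomaly"
    CONFIRMED_EMERGENCY = "confirmed emergency"


# Hypothetical thresholds on belief strength.
ANOMALY_THRESHOLD = 0.3
EMERGENCY_THRESHOLD = 0.9


def classify(belief: float, justified: bool) -> HomeState:
    """Map a belief strength to a dashboard state.

    An emergency is only "confirmed" when the belief is strong AND a
    justification exists; otherwise it stays a possible anomaly.
    """
    if belief >= EMERGENCY_THRESHOLD and justified:
        return HomeState.CONFIRMED_EMERGENCY
    if belief >= ANOMALY_THRESHOLD:
        return HomeState.POSSIBLE_ANOMALY
    return HomeState.ROUTINE_SAFE


def robot_action(state: HomeState, verbal_check_done: bool) -> str:
    """Choose a proportional response: always ask before assisting."""
    if state is HomeState.ROUTINE_SAFE:
        return "no action"
    if not verbal_check_done:
        return "check verbally"
    if state is HomeState.CONFIRMED_EMERGENCY:
        return "assist physically"
    return "keep monitoring"


state = classify(belief=0.95, justified=True)
print(state.value, "->", robot_action(state, verbal_check_done=False))
```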

3.4 Agents — Mediators of Intelligence

Agents serve as the connective tissue between the object, service, and application layers. They are context‑aware reasoning entities—not merely applications, nor only object models, but hybrid intelligences that adapt behavior across worlds. Each agent carries a partial worldview and a set of policies derived from UPA principles.

In the MVP, examples include:

- A Home Coordination Agent running on the edge hub that reconciles inputs from sensors, voice commands, and robotic status.

- A Health Context Agent embedded within wearables that interprets physiological data in relation to time and activity patterns.

- A Caregiver Interaction Agent that translates system knowledge into conversational updates or alerts for human caregivers.

Agents reason locally but remain accountable to system‑wide harmony and justification rules. They bridge entity‑specific intelligence (like a fall detector) with broader service orchestration (alerting, context switching). Functionally, an agent’s lifecycle mirrors the epistemic flow—observing (Data), interpreting (Information), updating beliefs, and issuing justified Knowledge to the service layer.

4. Expanding Horizons

Beyond these core layers lie the system’s ethical and operational infrastructure.

Governance & Certification: OAII will certify implementations through layered audits—verifying harmony (A15) scores, justification traces, and rule compliance. For the MVP, certification ensures that event recognition respects user autonomy while maintaining reliability.

Ethical Assurance: Each action links to a justification trace; e.g., if the robot approaches the user after a fall, the log includes reasoning steps and confidence values, ensuring reviewability.

Observability: All epistemic transitions are logged—when data became information, when a belief shifted, when knowledge was declared. This supports forensic analysis and continuous improvement.
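A minimal sketch of such a transition log, assuming an append-only in-memory list and JSON Lines export. The field names and the `TransitionLog` class are hypothetical; a real system would likely need durable, tamper-evident storage.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import List


@dataclass(frozen=True)
class Transition:
    world_id: str
    from_state: str
    to_state: str
    reason: str        # one step of the justification trace
    confidence: float
    timestamp: str     # ISO 8601, UTC


class TransitionLog:
    """Append-only record of epistemic transitions for later review."""

    def __init__(self) -> None:
        self._entries: List[Transition] = []

    def record(self, world_id: str, from_state: str, to_state: str,
               reason: str, confidence: float) -> Transition:
        entry = Transition(world_id, from_state, to_state, reason, confidence,
                           datetime.now(timezone.utc).isoformat())
        self._entries.append(entry)
        return entry

    def trace(self, world_id: str) -> List[Transition]:
        """Return all transitions for one world, in the order they occurred."""
        return [e for e in self._entries if e.world_id == world_id]

    def to_jsonl(self) -> str:
        """Serialize the log as one JSON object per line."""
        return "\n".join(json.dumps(asdict(e)) for e in self._entries)


log = TransitionLog()
log.record("W1", "information", "belief", "impact detected by wearable", 0.7)
log.record("W1", "belief", "knowledge", "no response to verbal check", 0.95)
log.record("W2", "data", "information", "motion segmented", 1.0)
print(log.to_jsonl())
```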

Security and Privacy: Data remain world‑scoped; raw sensor data can stay on‑device while higher‑level knowledge synchronizes to the cloud. Personal identity and location are separated through anonymized world identifiers.
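The document does not specify how anonymized world identifiers are produced. One plausible approach, shown here purely as an assumption, is a keyed hash (HMAC-SHA256) computed on the edge hub, so that the cloud sees a stable identifier without the household or room name. The function name, key handling, and 16-character truncation are illustrative.

```python
import hashlib
import hmac


def world_pseudonym(secret_key: bytes, household_id: str, room: str) -> str:
    """Derive a stable, non-reversible world identifier from a local secret.

    The key never leaves the edge hub, so the cloud cannot recompute the
    mapping from identifier back to household and room.
    """
    message = f"{household_id}/{room}".encode("utf-8")
    digest = hmac.new(secret_key, message, hashlib.sha256).hexdigest()
    return digest[:16]  # truncated for readability; an illustrative choice


key = b"example-key-kept-on-the-edge-hub"  # placeholder, not a real secret
kitchen_id = world_pseudonym(key, "household-42", "kitchen")
bedroom_id = world_pseudonym(key, "household-42", "bedroom")
print(kitchen_id, bedroom_id)
```

Strictly speaking this is pseudonymization rather than anonymization: anyone holding the key can rebuild the mapping, so key custody matters.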

Scalability & Resilience: Multiple users and places can interconnect through federated learning, sharing model parameters without sharing personal data, strengthening overall robustness.
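Sharing parameters without sharing personal data is often done by weighted parameter averaging, in the style of federated averaging. The sketch below assumes that approach, with plain lists standing in for model weights; the document itself does not commit to a particular aggregation scheme.

```python
from typing import List, Sequence, Tuple


def federated_average(updates: Sequence[Tuple[Sequence[float], int]]) -> List[float]:
    """Average model parameters from several homes, weighted by sample count.

    Each update is (weights, number_of_local_samples). Only these parameters
    leave the home; the raw sensor data stay local.
    """
    total_samples = sum(count for _, count in updates)
    if total_samples == 0:
        raise ValueError("no samples to average over")
    dim = len(updates[0][0])
    averaged = [0.0] * dim
    for weights, count in updates:
        if len(weights) != dim:
            raise ValueError("all updates must have the same number of parameters")
        for i, value in enumerate(weights):
            averaged[i] += value * count / total_samples
    return averaged


# Two homes contribute local fall-detector parameters.
home_a = ([1.0, 2.0], 1)   # trained on 1 local sample
home_b = ([3.0, 4.0], 3)   # trained on 3 local samples
global_weights = federated_average([home_a, home_b])
print(global_weights)
```

Parameter sharing alone does not guarantee privacy, so a production design would likely pair it with additional safeguards.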

5. Future Direction

The next architecture iteration will connect narrative layers with measurable artifacts:

- Schemas & Policies: Define JSON/ASN.1 models for each epistemic state in the MVP pipeline.

- Certification Tiers: OAII will introduce progressive levels—Conceptual (architecture verified), Prototype (functional system validated), Operational (field‑tested and harmonized).

- Collective Intelligence: Expand from one home to networks of cooperating homes where SGI systems exchange world mappings (Φ) for shared safety insights.

Example roadmap for the MVP:

- Phase 1: Edge data collection and interpretation prototypes (IoT, wearables).

- Phase 2: Belief and knowledge validation through robotic interaction.

- Phase 3: Certification pilot with OAII harmony and justification metrics.

This architecture serves as a living specification—a guiding narrative that will evolve alongside the systems it describes, maintaining unity through the polarity of design freedom and certification discipline.
